1GB THP support in Linux (2)

Previously, we talked about the overall design of 1GB THP and how to allocate 1GB pages in the system. Here I continue with more of the 1GB THP design.

Page Table Setup for THP and 1GB THP

One of the reasons THP is called transparent is that a user application can initially access a THP via a higher-level page table entry (e.g., a PMD or PUD) and then unmap part of the THP while retaining access to the rest, which is still mapped by lower-level page table entries (e.g., PTEs or PMDs). Taking a PMD THP as an example, the THP is initially mapped with a PMD; when the user partially unmaps it, the PMD is replaced by multiple PTEs. Most of this work is done in split_huge_pmd(), which calls __split_huge_pmd() for the actual operations. To support this, a page table deposit and withdrawal mechanism is implemented.
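From user space, the behavior described above can be exercised with a sketch like the one below. It is only an illustration: the function name partial_unmap_demo() and the THP_SIZE constant are made up here, a 2MB PMD THP size is assumed (x86_64), and madvise(MADV_HUGEPAGE) is only a hint, so whether the range is really backed by a THP depends on the system configuration.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <string.h>
#include <sys/mman.h>

#define THP_SIZE (2UL << 20) /* assumed PMD THP size: 2MB on x86_64 */

/* Returns 0 if the half that stays mapped is still readable at the same address. */
int partial_unmap_demo(void)
{
    size_t len = 2 * THP_SIZE; /* over-allocate so a 2MB-aligned range fits */
    char *raw = mmap(NULL, len, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (raw == MAP_FAILED)
        return -1;

    /* Round up to the next 2MB boundary inside the mapping. */
    char *thp = (char *)(((uintptr_t)raw + THP_SIZE - 1) & ~(THP_SIZE - 1));
    assert(thp + THP_SIZE <= raw + len);

#ifdef MADV_HUGEPAGE
    madvise(thp, THP_SIZE, MADV_HUGEPAGE); /* a hint only; the kernel may ignore it */
#endif
    memset(thp, 0x5a, THP_SIZE); /* fault the whole range in */

    /* Unmap the first half; if a THP backs the range, its PMD has to be split. */
    if (munmap(thp, THP_SIZE / 2) != 0)
        return -1;

    /* The second half keeps its virtual address and its contents. */
    char *kept = thp + THP_SIZE / 2;
    int ok = kept[0] == 0x5a && kept[THP_SIZE / 2 - 1] == 0x5a;

    munmap(raw, len); /* unmapping a range that contains a hole is allowed */
    return ok ? 0 : -1;
}
```

The application never asks for new virtual addresses: the surviving half is read through the same pointer arithmetic as before the munmap() call.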

Page table deposit and withdrawal for THP

The rationale for this mechanism is that splitting a PMD requires an additional page to serve as the PTE page table page, and a THP might be split under memory pressure, when the system might not be able to offer a new page to the kernel. Thus, the kernel preallocates a PTE page table page for each THP when it maps the THP with a PMD (similarly, 1 PMD page table page and 512 PTE page table pages are preallocated for each 1GB THP when the kernel maps the 1GB THP with a PUD). The preallocated page table pages are deposited and later withdrawn when the kernel chooses to map the THP with PTEs:
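To put numbers on the preallocation just described, here is a small arithmetic sketch. It assumes x86_64 with 4KB base pages and 512 entries per page table page; the constant and function names are invented for illustration and are not kernel symbols.

```c
#include <stddef.h>

/* Assumed x86_64 layout: 4KB base pages, 512 entries per page table page. */
enum { ENTRIES_PER_TABLE = 512, BASE_PAGE_SIZE = 4096 };

/* Page table pages preallocated for a THP mapped by one PMD entry. */
size_t pmd_thp_deposit_pages(void)
{
    return 1; /* one PTE page table page */
}

/* Page table pages preallocated for a 1GB THP mapped by one PUD entry. */
size_t pud_thp_deposit_pages(void)
{
    return 1 + ENTRIES_PER_TABLE; /* one PMD page plus 512 PTE pages */
}

/* Memory held by the given number of deposited page table pages. */
size_t deposit_bytes(size_t pages)
{
    return pages * BASE_PAGE_SIZE;
}
```

Under these assumptions, each PUD-mapped 1GB THP carries 513 deposited page table pages, a little over 2MB of memory, compared with a single 4KB page for a PMD-mapped THP.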

-

For page table deposit, in __do_huge_pmd_anonymous_page() (from handle_mm_fault() -> __handle_mm_fault() -> create_huge_pmd() -> do_huge_pmd_anonymous_page()), when a THP is allocated for a page fault, the PTE page table page is allocated

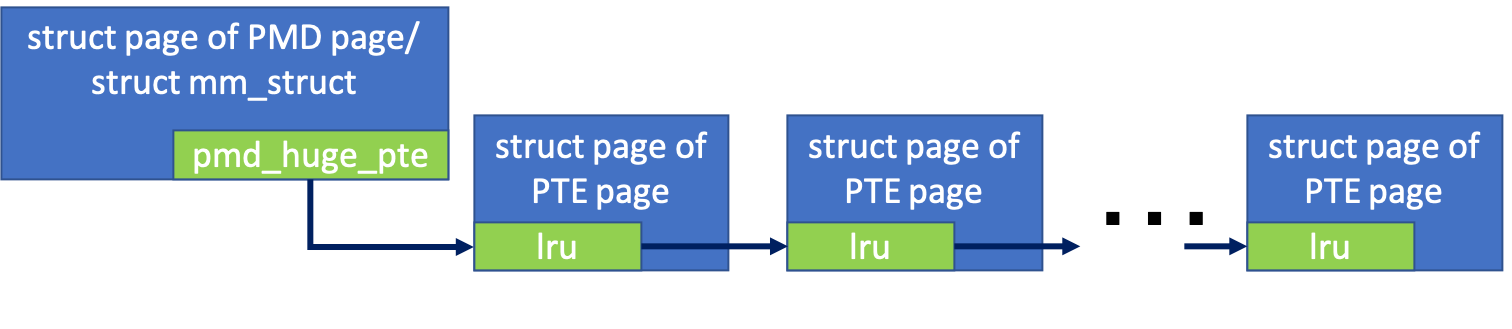

pgtable = pte_alloc_one(vma->vm_mm);and depositedpgtable_trans_huge_deposit(vma->vm_mm, vmf->pmd, pgtable);using pgtable_trans_huge_deposit(). In x86_64, that PTE page table page is stored in a doubly-linked list whose first entry is pointed by a fieldpmd_huge_ptefrom thestruct pageof the PMD page table page whenUSE_SPLIT_PMD_PTLOCKSis set (namely every PMD page table page is protected by a separate lock), otherwise (!USE_SPLIT_PMD_PTLOCKS), a fieldpmd_huge_ptefromstruct mm_structis used instead (namely all deposited page table pages are stored in a single place and all PMD page table pages from one process address space are protected by a single lock). Since each PMD page table page has 512 PMD entries, there could be up to 512 PTE page table pages deposited in one PMD page table page (pointed bypmd_huge_pte).

- For page table withdrawal, in __split_huge_pmd_locked() (called in __split_huge_pmd()), when a PMD is split into multiple PTEs, the kernel withdraws the deposited page table page

      pgtable = pgtable_trans_huge_withdraw(mm, pmd);

  using pgtable_trans_huge_withdraw() and uses it for making new PTEs.

Architectures like IBM System/390 have their own way of managing page table deposit and withdrawal, which might be different from what is described above for x86_64.
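The x86_64 deposit and withdrawal described above can be modelled in user space as follows. This is a simplified sketch, not the kernel code: struct pt_page is a hypothetical stand-in for the struct page of a page table page, its prev/next fields play the role of page->lru, and locking is left out entirely.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the struct page of a page table page. */
struct pt_page {
    struct pt_page *prev, *next;  /* models page->lru */
    struct pt_page *pmd_huge_pte; /* in a PMD page: first deposited PTE page */
};

/* Deposit a preallocated PTE page table page into a PMD page table page. */
void model_deposit(struct pt_page *pmd_page, struct pt_page *pte_page)
{
    assert(pmd_page && pte_page);
    struct pt_page *head = pmd_page->pmd_huge_pte;

    if (!head) {
        /* First deposit: a circular list with a single element. */
        pte_page->prev = pte_page->next = pte_page;
    } else {
        /* Link the new page right after the current head. */
        pte_page->prev = head;
        pte_page->next = head->next;
        head->next->prev = pte_page;
        head->next = pte_page;
    }
    pmd_page->pmd_huge_pte = pte_page;
}

/* Withdraw one deposited PTE page table page, or NULL if none is left. */
struct pt_page *model_withdraw(struct pt_page *pmd_page)
{
    assert(pmd_page);
    struct pt_page *page = pmd_page->pmd_huge_pte;

    if (!page)
        return NULL;
    if (page->next == page) {
        pmd_page->pmd_huge_pte = NULL; /* that was the last deposited page */
    } else {
        pmd_page->pmd_huge_pte = page->next;
        page->prev->next = page->next;
        page->next->prev = page->prev;
    }
    page->prev = page->next = NULL;
    return page;
}
```

Which deposited page comes back on a given withdrawal does not matter for splitting a PMD, since every deposited PTE page table page is interchangeable; what matters is that a page is guaranteed to be available without a new allocation.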

Using the mechanism above, the kernel is able to split a PMD entry into multiple PTE entries when a THP becomes partially mapped. User applications do not need to get new virtual addresses if they just unmap part of a THP. However, this mechanism might be suboptimal for 1GB THP, which requires depositing more page table pages at different page table levels.

Page table deposit and withdrawal for 1GB THP

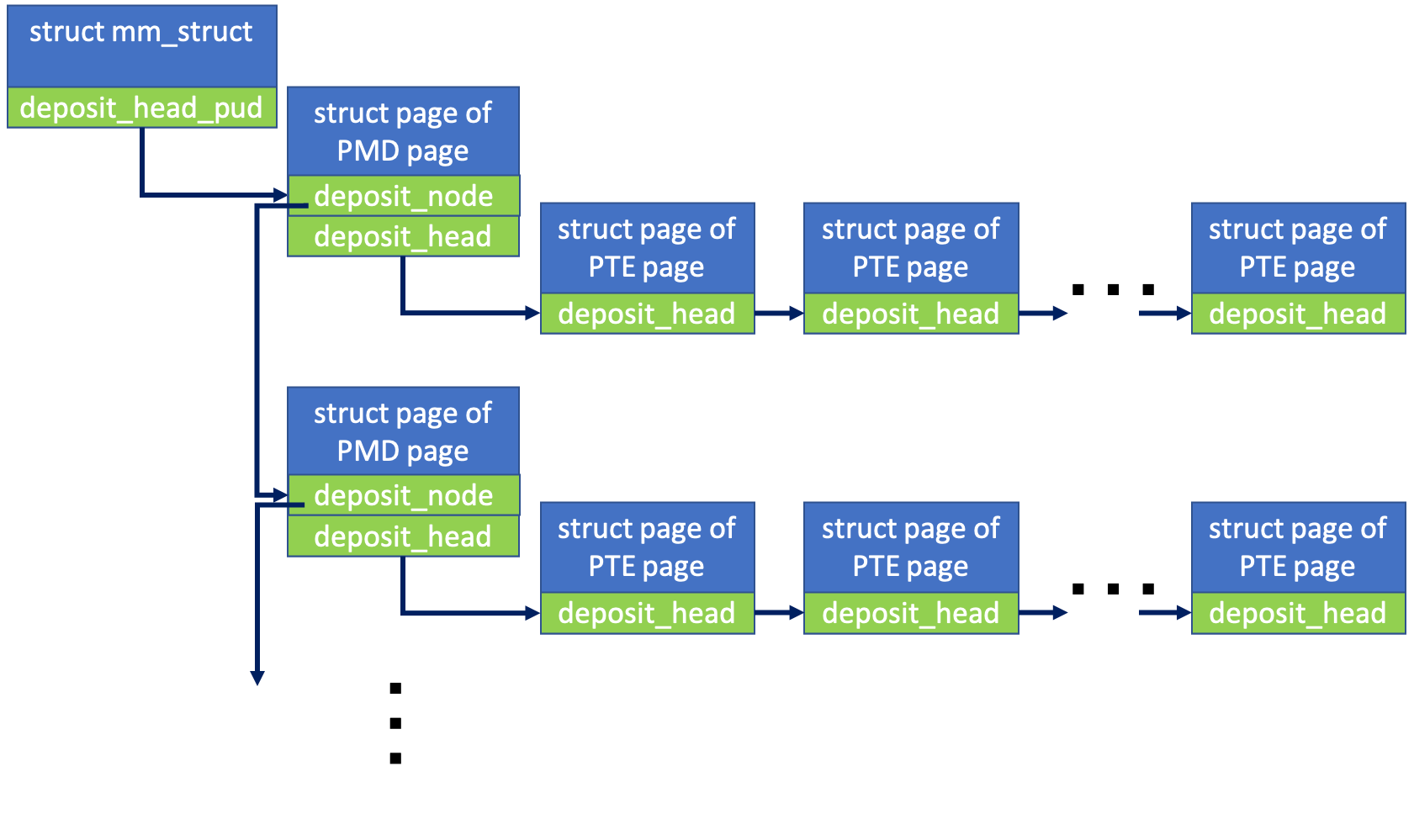

For each 1GB THP, the kernel needs to deposit 1 PMD page and 512 PTE pages if the THP is initially mapped by a PUD entry. The kernel now needs to deposit two kinds of page table pages, which might not be handled well by the existing mechanism.

Existing mechanism

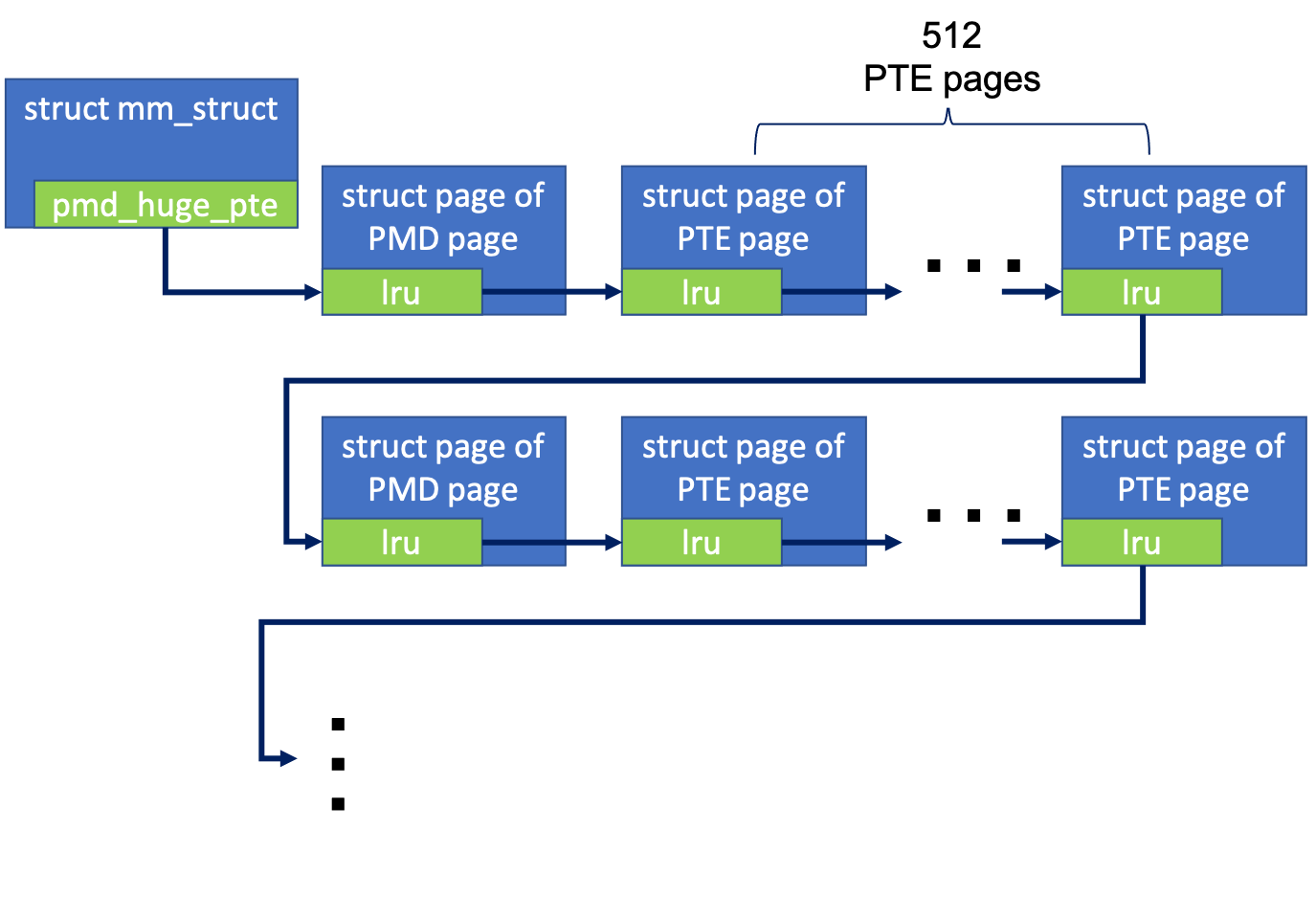

If we reuse the THP page table page deposit mechanism, we will have one long list storing a mix of PMD and PTE page table pages. This is suboptimal: mixing PMD and PTE pages in one list is error-prone, and during page table page withdrawal the kernel needs to do more work, first withdrawing 1 PMD page and 512 PTE pages and then depositing the 512 PTE pages into the PMD page.

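The extra work can be counted with a rough sketch like the one below. It assumes one list operation per page withdrawn or deposited and 512 PTE page table pages per PMD page table page; the names are illustrative only.

```c
/* Assumed: each PMD page table page has 512 entries, so 512 PTE pages per 1GB THP. */
enum { PTE_PAGES_PER_PMD_PAGE = 512 };

/* List operations needed to split one PUD-mapped 1GB THP with a single flat list. */
unsigned flat_list_split_ops(void)
{
    unsigned withdrawals = 1 + PTE_PAGES_PER_PMD_PAGE; /* 1 PMD page + 512 PTE pages */
    unsigned redeposits = PTE_PAGES_PER_PMD_PAGE;      /* PTE pages moved into the PMD page */

    return withdrawals + redeposits;
}
```

Under that counting, a single PUD split costs 1025 list operations, all performed while the split is in progress.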

New mechanism

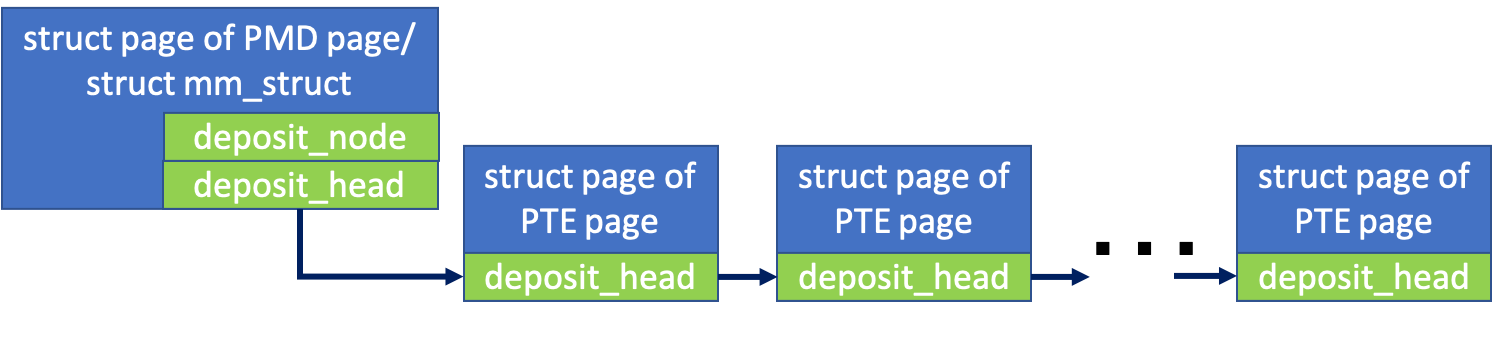

Instead, I made changes to better support depositing page table pages of multiple kinds. The old design uses a doubly-linked list, page->lru and page->pmd_huge_pte, for all deposited pages, which is not necessary, since a singly-linked list would suffice. We just need to replace the doubly-linked list page->pmd_huge_pte with two singly-linked lists, page->deposit_head and page->deposit_node.

For 1GB THP, these changes enable the kernel to chain all page table pages from the same level in the same list, making the page table page deposit hierarchical. In this way, when the kernel withdraws page table pages for a 1GB THP, it can just pull a PMD page out and place it in the page table without worrying about subsequent PTE page table page withdrawal, since the PTE pages were already deposited in the right place at the beginning.

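A user-space sketch of the hierarchical deposit might look like the following. The field names deposit_head and deposit_node come from the text above, but how they are used here (deposit_head as the first page deposited into a page, deposit_node as the link to the next page on the same list) is my assumption for illustration; struct pt_page and the function names are hypothetical, and locking is omitted.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the struct page of a page table page. */
struct pt_page {
    struct pt_page *deposit_head; /* assumed: first page deposited into this page */
    struct pt_page *deposit_node; /* assumed: next page on the list this page sits on */
};

/* Push child onto parent's singly-linked deposit list. */
void hier_deposit(struct pt_page *parent, struct pt_page *child)
{
    assert(parent && child);
    child->deposit_node = parent->deposit_head;
    parent->deposit_head = child;
}

/* Pop one page from parent's deposit list, or return NULL if it is empty. */
struct pt_page *hier_withdraw(struct pt_page *parent)
{
    assert(parent);
    struct pt_page *child = parent->deposit_head;

    if (child) {
        parent->deposit_head = child->deposit_node;
        child->deposit_node = NULL;
    }
    return child;
}

/* Count the pages currently deposited directly in parent. */
size_t hier_deposited(const struct pt_page *parent)
{
    size_t n = 0;

    for (const struct pt_page *p = parent->deposit_head; p; p = p->deposit_node)
        n++;
    return n;
}
```

In this model, the 512 PTE pages are deposited into the PMD page and the PMD page is deposited into the PUD page table page. Withdrawing the PMD page is then a single pop, and its PTE pages stay attached to it, ready for later PMD splits.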

With the new page table page deposit and withdrawal mechanism, the kernel is able to split a PUD entry that maps a 1GB THP transparently to user space.
